My name is Hadrien Croubois and I am a Ph.D. student at ENS de Lyon. My research as a Ph.D. student focuses on middleware design for the management of shared Cloud-based scientific-computing platforms; and more particularly how to optimise them for workflow execution. However, my interests are much broader and include HPC, physics, and biology large-scale simulations, image rendering, machine learning and of course cryptography and blockchain technologies.

Since September 2017 I am also a scientific consultant for iExec. I met Gilles at ENS de Lyon and it was the perfect opportunity for me to experience working in a team designing innovative solutions.

My role as a member of this team is to study existing work from the research community and provide insight into the design of a proof-of-contribution protocol for iExec. This article is by no means a solution to this complex issue. It is rather an overview of our understanding and ideas regarding this issue.

The iExec platform provides a network where application provider, workers, and users can gather and work together. Trust between these agents is to be achieved through the use of blockchain technology (Nakamoto consensus) and cryptography.

Our infrastructure is divided into 3 agents:

The goal of the Proof-of-Contribution protocol is to achieve trust between the different agents, and more particularly between users and workers, in order for the users to be able to rely on the results computed by an external actor whose incentive is, at best, based on income. In particular, we want to achieve protection against Byzantine workers (who could provide bad results to penalize users) and users (who could argue against legitimate work performed by legitimate workers).

Validation of the work performed by the worker can be achieved in two different ways:

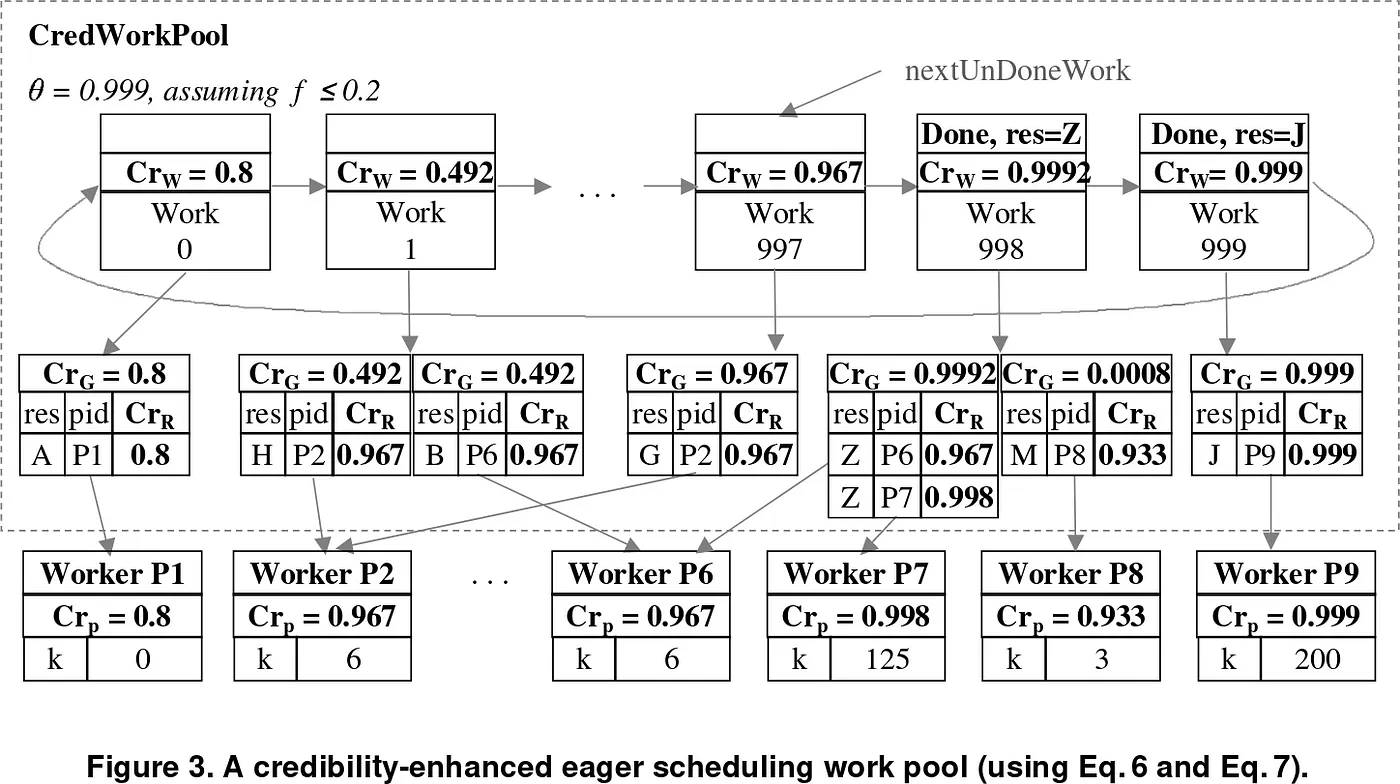

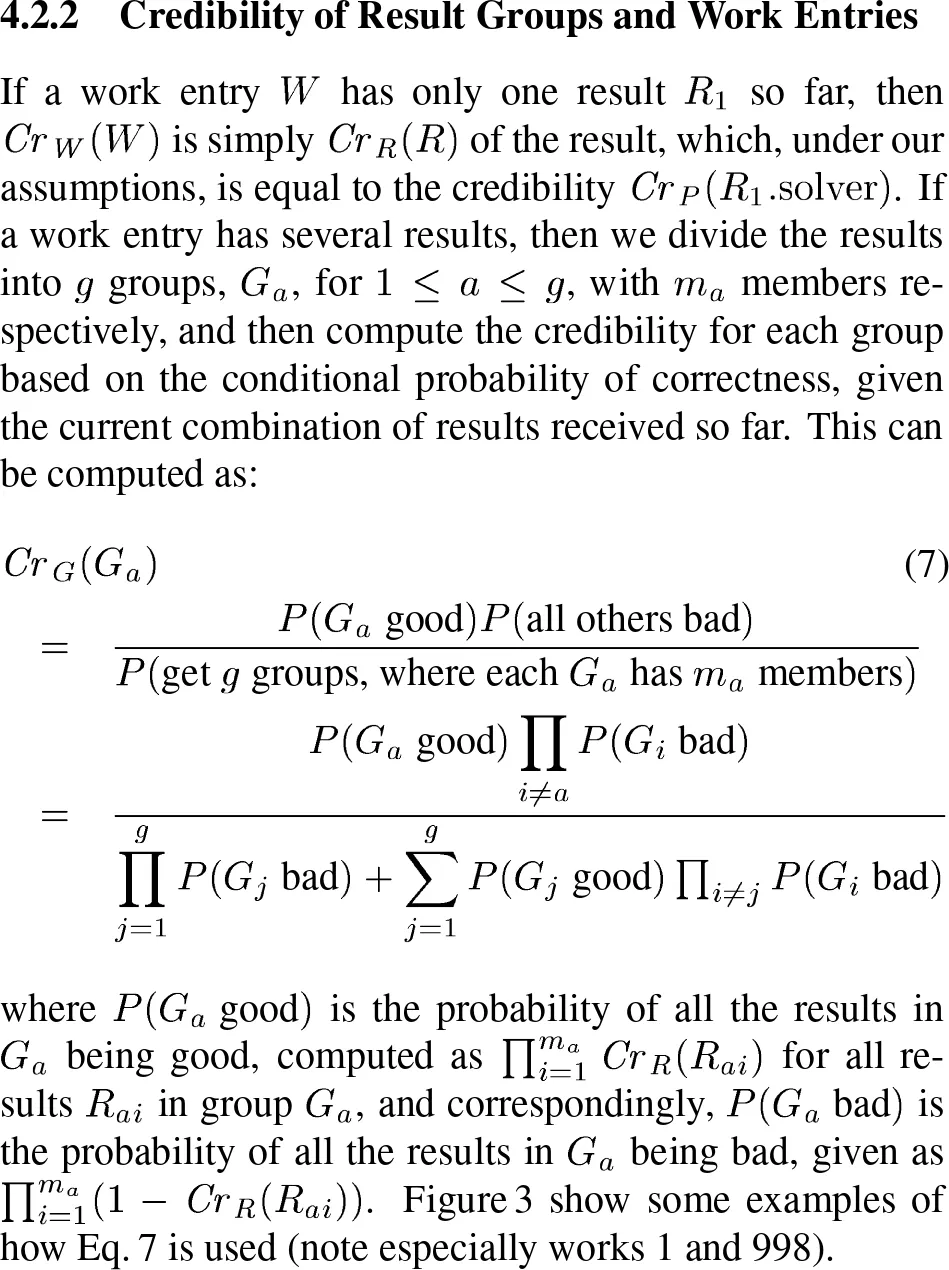

A significant contribution was published by Luis Sarmenta (2002. Sabotage-tolerance mechanisms for volunteer computing systems. Future Generation Computer Systems, 18(4), 561–572). The proposed approach is based on majority voting but rather than relying on a fixed m factor, it dynamically “decides” how many contributions are necessary to achieve consensus (within a specific confidence level). The replication level is therefore dynamic and automatically adapted, during execution, by the scheduler. This helps to achieve fast consensus when possible and to solve any conflicts.

This approach relies on worker reputation to limit the potential impact of Byzantine agents and to achieve consensus. Yet this approach is designed for desktop grid infrastructures, where money is out of the equation. Using the financial incentive of the different actors, we can modify and improve their approach to better fit our context:

The main drawback of Sarmenta’s article is the assumption that Byzantine workers are not working together and do not coordinate their attacks. While this assumption does not hold in our context, we believe we can still achieve it by selecting workers randomly among the worker pool. Therefore Byzantine workers controlled by a single entity should statistically be dispatched on many different tasks and should therefore not be able to overtake the vote for a specific task.

While Sarmenta’s work is interesting, a few modifications are required to work in our context. In this section, we discuss preliminary ideas on how we believe this work could be adapted to iExec needs. Our idea is to orchestrate the exchanges between the users and the workers as described below.

In addition to the users and workers, we have an additional component: the scheduler. Schedulers manage pools of worker and act as middlemen between the blockchain (listening to the iExec smart-contract) and the workers. A scheduler can, in fact, be composed of multiple programs which complementary features but we will here consider it as a single “virtual” entity.

One should notice that our discussion here does not deal with the scheduling algorithm itself. In a scheduler, the scheduling algorithm handles the logic responsible for the placement of jobs and handles execution errors. The scheduler is free to use any scheduling algorithm it desires as long as it can deal with step 3 and 5 of the following protocol.

Sarmenta’s voting helps to achieve the given level of confidence using worker reputation and dynamic replication. This confidence level is defined by a value ε which describes the acceptable error margin. Results should only be returned if a confidence level higher than 1-ε is achieved.

This value is a balance between cost and trust. A lower ε means more confidence in the result, but also requires more reputation/contributions to achieve consensus, and therefore more work to be performed. While this value could be defined by the user for each task, they might not know how to set it and it might cause billing issues.

We believe this value should be fixed for a worker pool. Therefore the billing policy could be defined for a worker pool depending on the performance of the workers (speed) and the ε value used by this worker pool scheduler (level of confidence).

The user would then be free to choose between worker pools. Some worker pools might only contain large nodes running technology like Intel SGX to achieve fast result with low replication. Other worker pools could contain (slower) desktop computers and have their consensus settings adapted to this context.

With consensus managed by the scheduler and financial opportunities for late voters provided by the security deposit of opposing voters, the users should not worry about anything. Users pay for a task to be executed on a pool of worker, regardless of the number of workers that end up involved in the consensus.

If consensus is fast and easy the payment of the user is enough to retribute the few workers who took part in the vote. If the consensus is hard and requires a lot of contributions, the workers are retributed using the security deposit of losing voters. This gives the workers a financial incentive to contribute to a consensus with many voters without requiring the user to pay more.

In the current version of this work, the protocol is such as the user has no part in the consensus. Payments are done when submitting the task and no stake is required. Results are public and guaranteed by the consensus. Users can therefore not discuss a result.

We believe the protocol described previously to be secure providing a few assumptions are met :

Finally, we believe that both scheduler and workers will be inclined to work correctly in order to provide a good service to the users and benefit from the iExec ecosystem. Having 51% of the reputation controlled by actors wanting to do things right and benefit from it should not be an issue.

Incentives for the different agents are as follows

In order to make money, the scheduler requires users to submit jobs and workers to register in its worker pool. This gives him the incentive to manage the worker pool correctly and grow strong.

One of the key elements that could ultimately help a scheduler getting bigger and attracting more workers and users is to be open about its decisions. We believe that a scheduler could rely on a blockchain mechanism to orchestrate the protocol described above.

In fact, this protocol is designed so that every message can, and should, be public. Security is achieved using cryptography. In particular, the use of a blockchain solves the issue of proving a contribution existence (presence on the blockchain) and validity (precedence to the vote results).

The main issue that still has to be solved is the worker designations. At step 3, the scheduler submits the task to specific workers. This is important for two reasons:

We don’t want workers to race. This would favor fast nodes and one could attack the voting system by coordinating many fast nodes to take over the vote before other nodes can contribute.

We don’t want malicious nodes to take over some votes. By randomly assigning workers to jobs we distribute malicious nodes amongst many votes where they would not be able to take over and where their best play is to provide good results and benefit from the platform working correctly.

Such a mechanism requires a source of randomness which any observers of the blockchain can agree on. This problem is beyond the scope of this post. Having such a source of entropy could help the scheduler designate workers using a random yet verifiable algorithm.

The data required for verification would be public. The only change required to the protocol would be that a valid contribution from a worker would require a proof that the worker was designated by a scheduler.